这个练习篇主要记录的是我在学习《机器学习》阶段所练习过的竞赛练习项目。

按时间排序。

你可以在Here查看我关于机器学习的笔记。

SF-公共自行车使用量预测 10k

问题简述

回归问题,10k级数据量,较小。主要是看看已有的模型,自己尝试运行一下,了解基本函数的作用。

比赛地址:http://sofasofa.io/competition.php?id=1#c1

比赛信息:

本比赛为个人练习赛,主要针对于于数据新人进行自我练习、自我提高,与大家切磋。

练习赛时限:2017-05-06 至 2019-05-06

任务类型:回归

背景介绍:

公共自行车低碳、环保、健康,并且解决了交通中“最后一公里”的痛点,在全国各个城市越来越受欢迎。本练习赛的数据取自于两个城市某街道上的几处公共自行车停车桩。我们希望根据时间、天气等信息,预测出该街区在一小时内的被借取的公共自行车的数量。数据来源:

Laboratory of Artificial Intelligence and Decision Support (LIAAD), University of Porto, Portugal。为了公平起见,数据已经进行脱敏加工处理。标题图片来源:36氪。

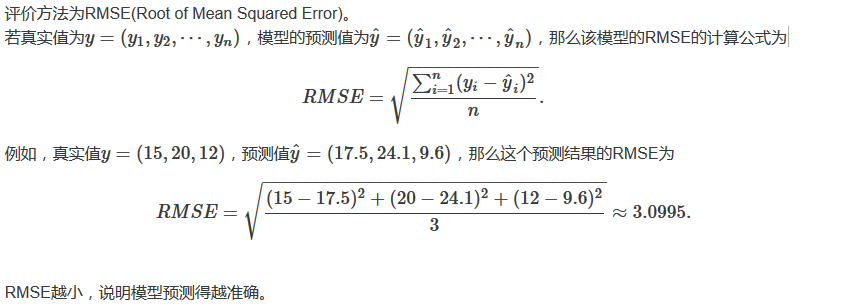

评价方法

代码

线性回归、xgboost回归、决策树代码摘自比赛网站。为公开代码。

线性回归 39.132

注意:https://segmentfault.com/a/1190000000322433

在python2中,map会直接返回结果,比如说:

可以直接返回

但是到了python3, 返回的就是一个map对象:

如果要得到结果,必须用list作用于这个map对象。

如果说计算结果已经出来了,只是要用list来打印结果,那就算了。

1 | # -*- coding: utf-8 -*- |

岭回归 39.132

1 | # -*- coding: utf-8 -*- |

贝叶斯岭回归

1 | # -*- coding: utf-8 -*- |

Lasso回归 39.294

1 | # -*- coding: utf-8 -*- |

决策树 28.818

1 | # -*- coding: utf-8 -*- |

xgboost回归 18.947

1 | # -*- coding: utf-8 -*- |

更多

None.

SF-交通事故理赔审核 100k

比赛地址:http://sofasofa.io/competition.php?id=2#c1

问题简述

训练集中共有200000条样本,预测集中有80000条样本。

比赛概述

本比赛为个人练习赛,适用于入门二元分类模型,主要针对于数据新人进行自我练习、自我提高,与大家切磋。

练习赛时限:2017-06-03 至 2019-06-03

任务类型:二元分类

背景介绍:

在交通摩擦(事故)发生后,理赔员会前往现场勘察、采集信息,这些信息往往影响着车主是否能够得到保险公司的理赔。训练集数据包括理赔人员在现场对该事故方采集的36条信息,信息已经被编码,以及该事故方最终是否获得理赔。我们的任务是根据这36条信息预测该事故方没有被理赔的概率。

数据来源:

我们低调的合作方——某汽车大数据网站。标题图片来源:搜狐汽车。

评价方法

你的提交结果为每个测试样本未通过审核的概率,也就是Evaluation为1的概率。评价方法为精度-召回曲线下面积(Precision-Recall AUC),以下简称PR-AUC。

PR-AUC的取值范围是0到1。越接近1,说明模型预测的结果越接近真实结果。

精度和召回的定义和计算方式可参考问题:什么是混淆矩阵?中的回答。

PR-AUC的定义可参考问题:精度-召回AUC是什么?

PR-AUC的计算方法可以参考问题:Python里如何计算召回精度AUC?

代码

Lasso逻辑回归、随机森林分类模型为标杆模型。

逻辑回归 0.632113

1 | # -*- coding: utf-8 -*- |

Lasso逻辑回归 0.714644

1 | # -*- coding: utf-8 -*- |

随机森林分类模型 0.850897

1 | # -*- coding: utf-8 -*- |

更多

Kaggle-泰坦尼克Titanic: Disaster

问题简述

二分类。1k数据。

比赛地址:https://www.kaggle.com/c/titanic

比赛信息:

Competition Description

The sinking of the RMS Titanic is one of the most infamous shipwrecks in history. On April 15, 1912, during her maiden voyage, the Titanic sank after colliding with an iceberg, killing 1502 out of 2224 passengers and crew. This sensational tragedy shocked the international community and led to better safety regulations for ships.

One of the reasons that the shipwreck led to such loss of life was that there were not enough lifeboats for the passengers and crew. Although there was some element of luck involved in surviving the sinking, some groups of people were more likely to survive than others, such as women, children, and the upper-class.

In this challenge, we ask you to complete the analysis of what sorts of people were likely to survive. In particular, we ask you to apply the tools of machine learning to predict which passengers survived the tragedy.

Practice Skills

- Binary classification

- Python and R basics

BUG:

代码

1 |

更多

Kaggle机器学习入门实战 — Titanic乘客生还预测

Kaggle Titanic 生存预测 — 详细流程吐血梳理

Kaggle Titanic Supervised Learning Tutorial

Kaggle-房价预测House Prices

问题简述

比赛地址:https://www.kaggle.com/c/house-prices-advanced-regression-techniques

Start here if…

You have some experience with R or Python and machine learning basics. This is a perfect competition for data science students who have completed an online course in machine learning and are looking to expand their skill set before trying a featured competition.

Competition Description

Ask a home buyer to describe their dream house, and they probably won’t begin with the height of the basement ceiling or the proximity to an east-west railroad. But this playground competition’s dataset proves that much more influences price negotiations than the number of bedrooms or a white-picket fence.

With 79 explanatory variables describing (almost) every aspect of residential homes in Ames, Iowa, this competition challenges you to predict the final price of each home.

Practice Skills

- Creative feature engineering

- Advanced regression techniques like random forest and gradient boosting

Acknowledgments

The Ames Housing dataset was compiled by Dean De Cock for use in data science education. It’s an incredible alternative for data scientists looking for a modernized and expanded version of the often cited Boston Housing dataset.

代码

1 |

更多

https://www.kaggle.com/neviadomski/how-to-get-to-top-25-with-simple-model-sklearn

Kaggle-数字识别Digit Recognition

比赛地址:https://www.kaggle.com/c/digit-recognizer

问题简述

Start here if…

You have some experience with R or Python and machine learning basics, but you’re new to computer vision. This competition is the perfect introduction to techniques like neural networks using a classic dataset including pre-extracted features.

Competition Description

MNIST (“Modified National Institute of Standards and Technology”) is the de facto “hello world” dataset of computer vision. Since its release in 1999, this classic dataset of handwritten images has served as the basis for benchmarking classification algorithms. As new machine learning techniques emerge, MNIST remains a reliable resource for researchers and learners alike.

In this competition, your goal is to correctly identify digits from a dataset of tens of thousands of handwritten images. We’ve curated a set of tutorial-style kernels which cover everything from regression to neural networks. We encourage you to experiment with different algorithms to learn first-hand what works well and how techniques compare.

Practice Skills

- Computer vision fundamentals including simple neural networks

- Classification methods such as SVM and K-nearest neighbors

Acknowledgements

More details about the dataset, including algorithms that have been tried on it and their levels of success, can be found at http://yann.lecun.com/exdb/mnist/index.html. The dataset is made available under a Creative Commons Attribution-Share Alike 3.0 license.

代码

kaggle入门| Digit Recognizer准确度100% - 知乎

1 |

更多

https://blog.csdn.net/u012162613/article/details/41929171

DC-“达观杯”文本智能处理挑战赛

问题简述

多分类。100k级数据量。这是第一个练习项目,代码是已有的,只是熟悉数据竞赛的流程。

比赛地址:“达观杯”文本智能处理挑战赛。

比赛信息:

2018年人工智能的发展在运算智能和感知智能已经取得了很大的突破和优于人类的表现。而在以理解人类语言为入口的认知智能上,目前达观数据自然语言处理技术已经可以实现文档自动解析、关键信息提取、文本分类审核、文本智能纠错等一定基础性的文字处理工作,并在各行各业得到充分应用。

自然语言处理一直是人工智能领域的重要话题,而人类语言的复杂性也给 NLP 布下了重重困难等待解决。长文本的智能解析就是颇具挑战性的任务,如何从纷繁多变、信息量庞杂的冗长文本中获取关键信息,一直是文本领域难题。随着深度学习的热潮来临,有许多新方法来到了 NLP 领域,给相关任务带来了更多优秀成果,也给大家带来了更多应用和想象的空间。

此次比赛,达观数据提供了一批长文本数据和分类信息,希望选手动用自己的智慧,结合当下最先进的NLP和人工智能技术,深入分析文本内在结构和语义信息,构建文本分类模型,实现精准分类。未来文本自动化处理的技术突破和应用落地需要人工智能从业者和爱好者的共同努力,相信文本智能处理技术因为你的算法,变得更加智能!

任务:建立模型通过长文本数据正文(article),预测文本对应的类别(class)

数据:

*注 : 报名参赛或加入队伍后,可获取数据下载权限。

数据包含2个csv文件:

- train_set.csv:此数据集用于训练模型,每一行对应一篇文章。文章分别在“字”和“词”的级别上做了脱敏处理。共有四列: 第一列是文章的索引(id),第二列是文章正文在“字”级别上的表示,即字符相隔正文(article);第三列是在“词”级别上的表示,即词语相隔正文(word_seg);第四列是这篇文章的标注(class)。 注:每一个数字对应一个“字”,或“词”,或“标点符号”。“字”的编号与“词”的编号是独立的!

- test_set.csv:此数据用于测试。数据格式同train_set.csv,但不包含class。 注:test_set与train_test中文章id的编号是独立的。 友情提示:请不要尝试用excel打开这些文件!由于一篇文章太长,excel可能无法完整地读入某一行!

BUG:

csv_read()读入时文件较大(1G),python停止工作,但是查看电脑内存最高却使用了不到50%(同时读入时内存激增/飙升)解决方案:解决Python memory error的问题(四种解决方案),似乎没有用!【真】解决方案:(Here)

- 首先分块读

read_csv('xxx.csv', chunksize=XXX) - 然后边读边合并

concat([df, chunk]) - 似乎本质上是因为内存调用过快引起的Python程序崩溃,只要放缓读入速度就能解决问题

- 如果物理内存真的不够,则可以增加虚拟内存(一般设为物理内存的1~3倍)

- 首先分块读

理论解释:内存泄露(Memory leak)

也可能是内存溢出https://stackoverflow.com/questions/38487334/pandas-python-memory-spike-while-reading-3-2-gb-file

根据楼主获得的新解决方案

1

2

3

4

5

6dtypes = {'id': pd.np.int8,

'article':pd.np.str,

'word_seg':pd.np.str,

'class':pd.np.int8}

df_train = pd.read_csv('./train_set.csv', dtype=dtypes)

df_test = pd.read_csv('./test_set.csv', dtype=dtypes)奇怪的是,现在突然原版的

df_train = pd.read_csv('./train_set.csv')也不报错了!WTF!!!读入表现得很平缓。恐怕这是一个不可复现的程序漏洞……

折腾这么久突然自己好了,有毒啊

https://stackoverflow.com/questions/31886939/reduce-memory-usage-pandas/47577606#47577606

pandas似乎有一个自动判断类型的过程,如果不用dtype事先指定,会额外消耗比较多的内存(但我实际测试感觉没什么区别,最后内存最大占用都3个g左右)

- Pandas loads in string columns as object type by default. For all the columns which have the type object, try to assign the type category to these columns by passing a dictionary to parameter dtypes of the read_csv function. Memory usage decreases dramatically for columns with 50% or less unique values.

- Pandas reads in numeric columns as float64 by default. Use pd.to_numeric to downcast float64 type to 32 or 16 if possible. This again saves you memory.

Anyway,这个BUG就像球状闪电)一样消失了……

TypeError: drop() got an unexpected keyword argument ‘columns’

- Pandas版本过低,至少要0.21版本,使用

conda update pandas命令更新- 如果使用了镜像网站(更新源),

可能版本还是不够,使用pip install -U pandas更新

- 如果使用了镜像网站(更新源),

- Pandas版本过低,至少要0.21版本,使用

Keword找不到错误

- 如果在分块的时候,read_csv使用了奇怪的seg=’;‘参数则会出现此异常,从输出来看似乎是csv的表格系统被该分隔符所破坏,删去该参数即可

第1次提交得分:5%(最后运行了40分钟orz)

- 发现代码写错了:xtest = vetorizer.transform(dftest[‘word_seg’])(原来复制粘贴的是train,WTF)

CPU使用率只有25%

- 4核,可能需要改写算法,形成多个thread(More)

代码

逻辑回归+分块读入 73%

1 | import pandas as pd |

注释版:

1 | import pandas as pd |

更多

文本分类任务基本框架:

文本——特征工程(上限)——分类器(效能)——类别。

文本特征提取:

- 经典文本特征(前人研究)

- TF,TF-IDF,Doc2vec,Word2vec

- 手工构造(数据挖掘)

- 寻找可能影响分类的新特征

- 比如,文章的长度

- 相关构造

- 多项式扩展

- 寻找可能影响分类的新特征

- 神经网络提取(利用某一层输出作为文本特征)

- 对于10万量级,传统优于深度学习算法;当百万级数据时,反之。(不绝对)

特征选择:

- 减少维数灾难,计算量降低

- 降低学习任务难度

- 方法:包裹式,嵌入式,过滤式(见西瓜书特征选择部分)

特征降维:

- 有监督降维:LDA(西瓜书第三章)

- 无监督降维:LSA,Ida,NMF

主要分类器:

- 基于sklearn实现

- 逻辑回归

- 简单快速,可解释(优先使用),稀疏特征

- 支持向量机

- 朴素贝叶斯

- 随机森林

- bagging

- 逻辑回归

- Lightgbm(和xgboost一样,效果更好,杀器!基于GBDT算法实现,见《统计学习方法》8.4.3节)

- xgboost

- 神经网络

提分关键:多个单模型进行融合。(必做,见西瓜书第八章)

- 投票法

- 学习法

前提,各个模型好而不同。

保证差异:不同的数据集,不同的分类器。

代码介绍:

https://github.com/MLjian/TextClassificationImplement

有两个方法,机器学习ML,和深度学习DL。

可以先过一遍for beginner。

BD-短视频内容理解与推荐竞赛

比赛地址:https://biendata.com/competition/icmechallenge2019/

问题简述

背景介绍

近年来,机器学习在图像识别、语音识别等领域取得了重大进步,但在视频内容理解领域仍有许多问题需要探索。字节跳动公司旗下的TikTok(抖音海外版)短视频APP在全球范围内的用户中获得非常多的好评,短视频的内容理解与推荐技术成为了我们关注的焦点。

一图胜千言,仅一张图片就包含大量信息,难以用几个词来描述,更何况是短视频这种富媒体形态。面对短视频内容理解的难题,字节跳动作为一家拥有海量短视频素材和上亿级用户行为数据的公司,通过视频内容特征和用户行为数据,可以有充足的数据来预测用户对短视频的喜好。

本次竞赛提供多模态的短视频内容特征,包括视觉特征、文本特征和音频特征,同时提供了脱敏后的用户点击、喜爱、关注等交互行为数据。参赛者需要通过一个视频及用户交互行为数据集对用户兴趣进行建模,然后预测该用户在另一视频数据集上的点击行为。

竞赛最终根据参赛者提交的模型和预测结果,依据评分进行排名,具体见评估准则。

竞赛任务

通过构建深度学习模型,预测测试数据中每个用户id在对应作品id上是否浏览完作品和是否对作品点赞的概率加权结果。 本次比赛使用 AUC(ROC曲线下面积)作为评估指标。AUC 越高,代表结果越优,排名越靠前。

赛道1

大规模数据集,亿级别的数据信息。

赛道2

小规模数据集,千万级别的数据信息。

讨论区

参赛队伍可以在比赛页面的讨论区进行讨论。如有赛题和数据方面的问题,请发邮件至AI-Lab-challenge@bytedance.com。如有竞赛提交方面的问题,请发邮件至support@biendata.com。

数据集

| 名称 | 格式 | 链接 | 提取码 |

|---|---|---|---|

| track1_sample_submission.zip | zip (377.7 MB) | https://pan.baidu.com/s/1QvoRbJMizWZtLvBIT2B9Bg | 8qrs |

| track2_sample_submission.zip | zip (24.5 MB) | https://pan.baidu.com/s/1GeiRfQ0lV7NcC1JSQ5nTMA | 667i |

Baseline

https://github.com/challenge-ICME2019-Bytedance/Bytedance_ICME_challenge

竞赛提供的baseline方法使用到以下5个特征:user_id, user_city, item_id,author_id,item_city

- TRACK2 LIKE TASK:

auc: 86.5%

#————————————params————————————-#

embedding_size = 40

optimizer = adam

lr = 0.0005

- TRACK FINISH TASK:

auc: 69.8%

#————————————params————————————-#

embedding_size = 40

optimizer = adam

lr = 0.0001

主程序:(需要调用其它接口)

1 | import os |

代码

1 |

更多

某某赛(待更新)

比赛地址:

问题简述

代码

1 |